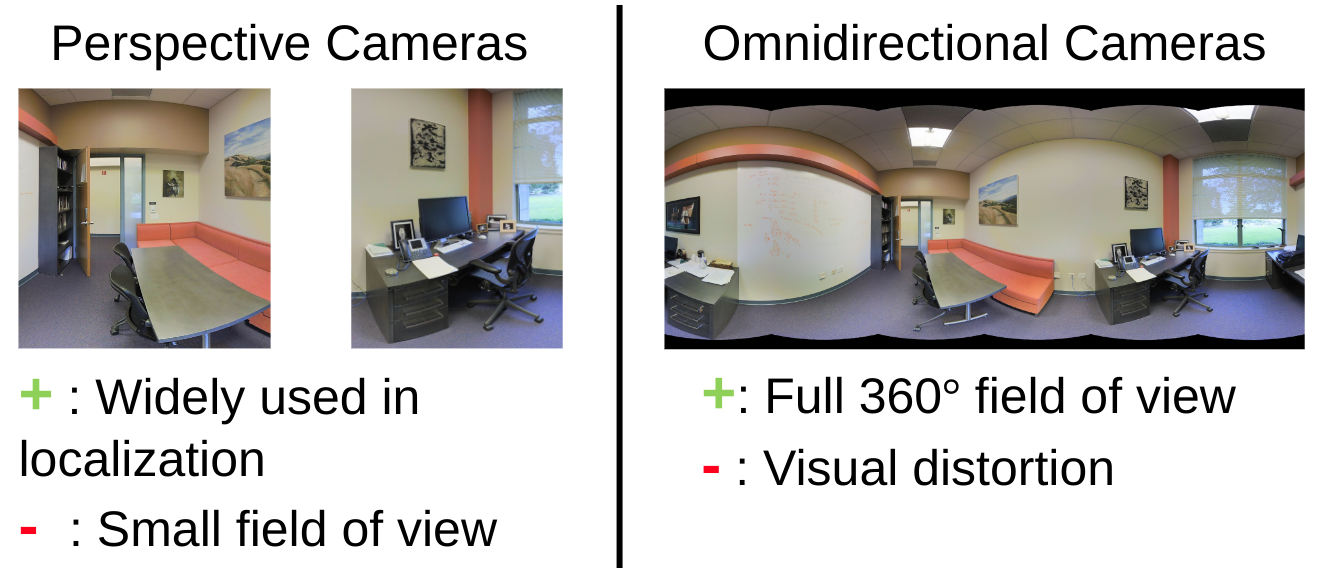

We present PICCOLO, a simple and efficient algorithm for omnidirectional localization. Given a colored point cloud and a 360° panorama image of a scene, our objective is to recover the camera pose at which the panorama image was taken. Our pipeline works in an off-the-shelf manner with a single image given as a query and does not require training neural networks or collecting ground-truth poses of images. Instead, we match each point cloud color to the holistic view of the panorama image with gradient-descent optimization to find the camera pose. Our loss function, called sampling loss, is point cloud-centric: it is evaluated at the projected location of every point in the point cloud. In contrast, conventional photometric loss is image-centric, comparing colors at each pixel location. With this simple change in the compared entities, sampling loss effectively overcomes the severe visual distortion of omnidirectional images and exploits the global context of the 360° view to handle challenging scenarios for visual localization. PICCOLO outperforms existing omnidirectional localization algorithms in both accuracy and stability when evaluated in various environments.

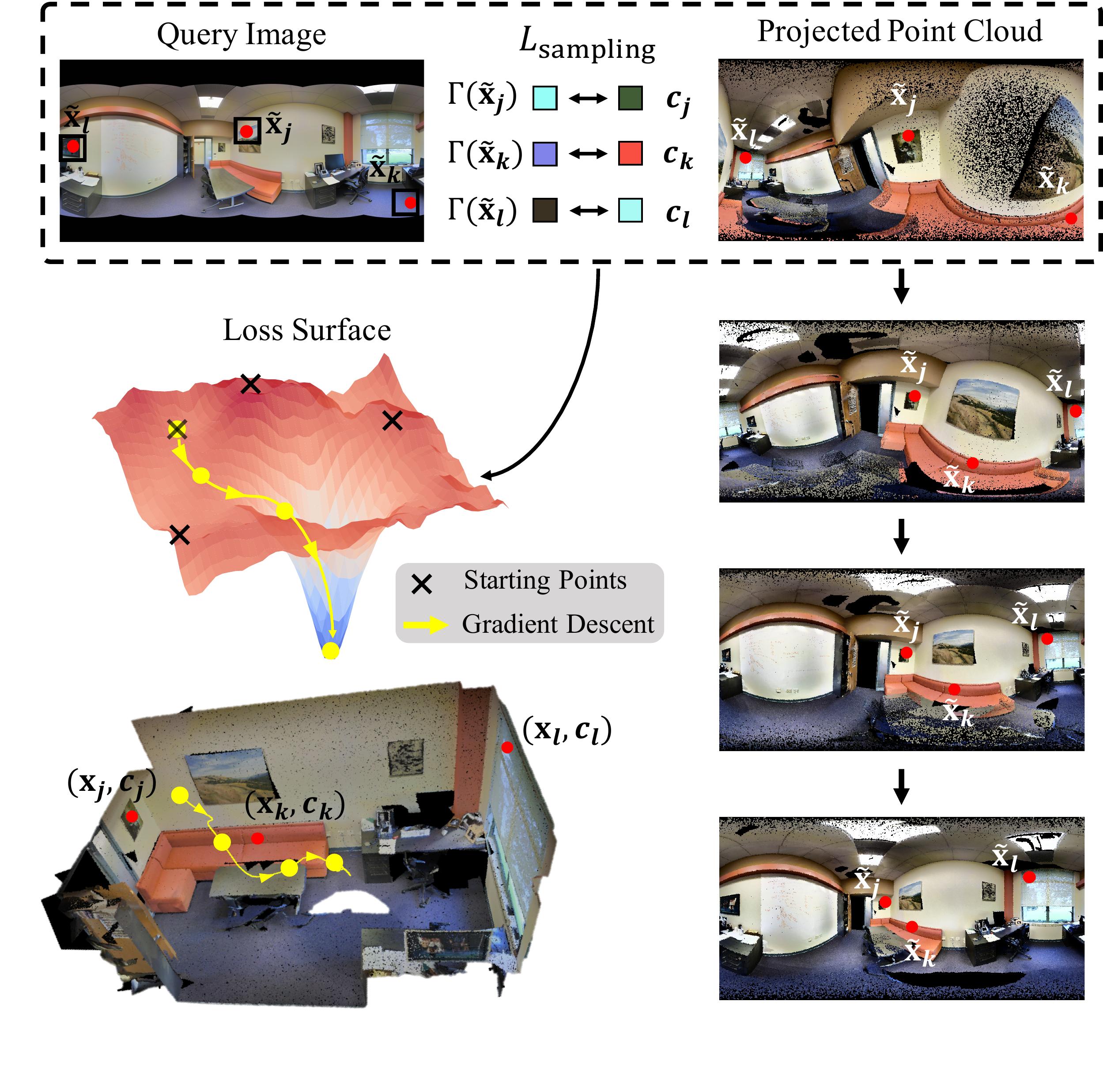

While perspective cameras are more widely used for localization, omnidirectional cameras have the potential to enable robust localization due to their large field of view. Despite this benefit, however, omnidirectional images exhibit visual distortions that make it difficult to apply existing localization algorithms developed for perspective cameras.

Given an omnidirectional image and a colored point cloud, PICCOLO aims to find the 6DoF camera pose. Unlike existing works in visual localization, we assume that no global or local features are cached prior to localization, and exploit only the color information for pose estimation.

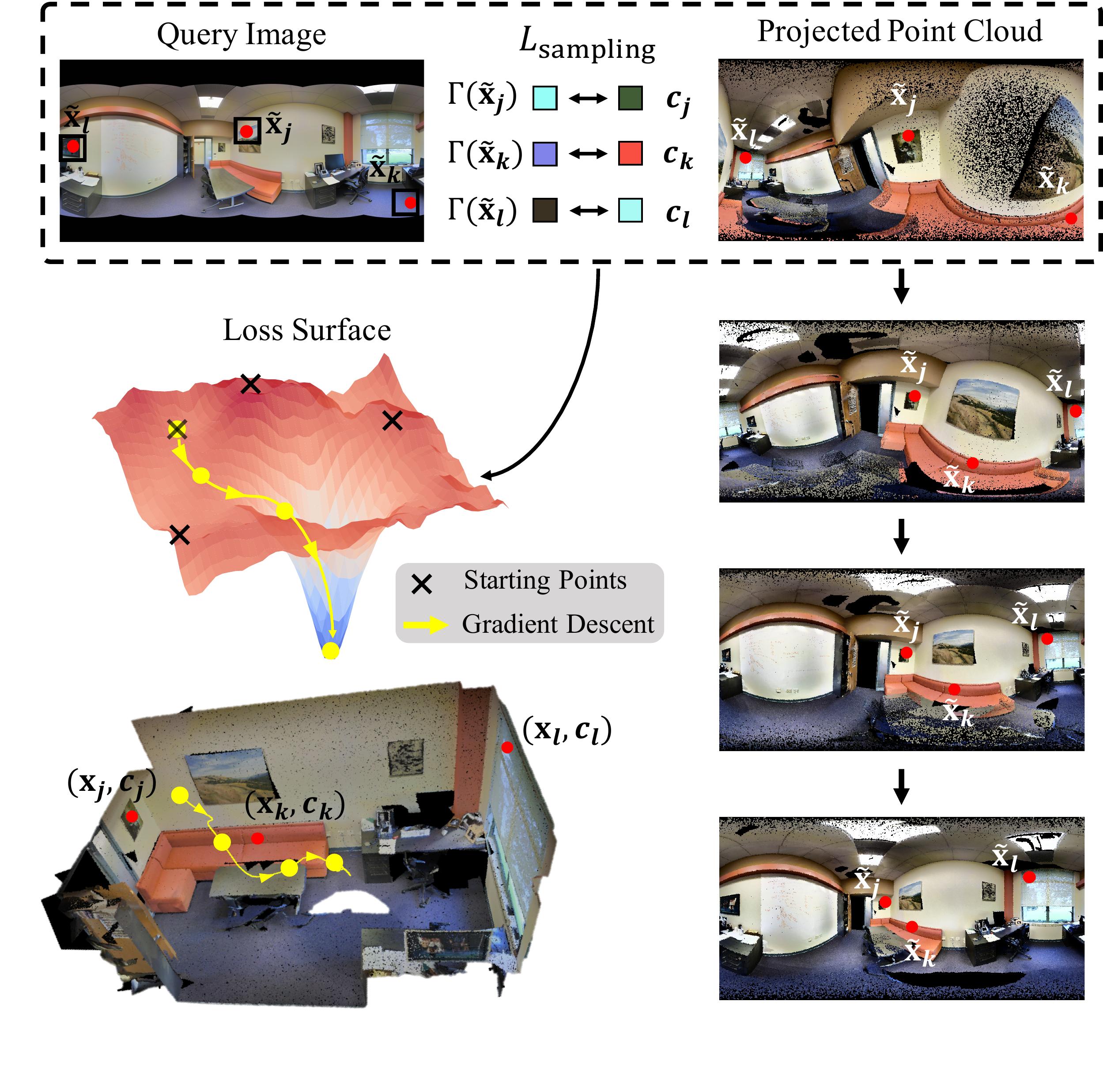

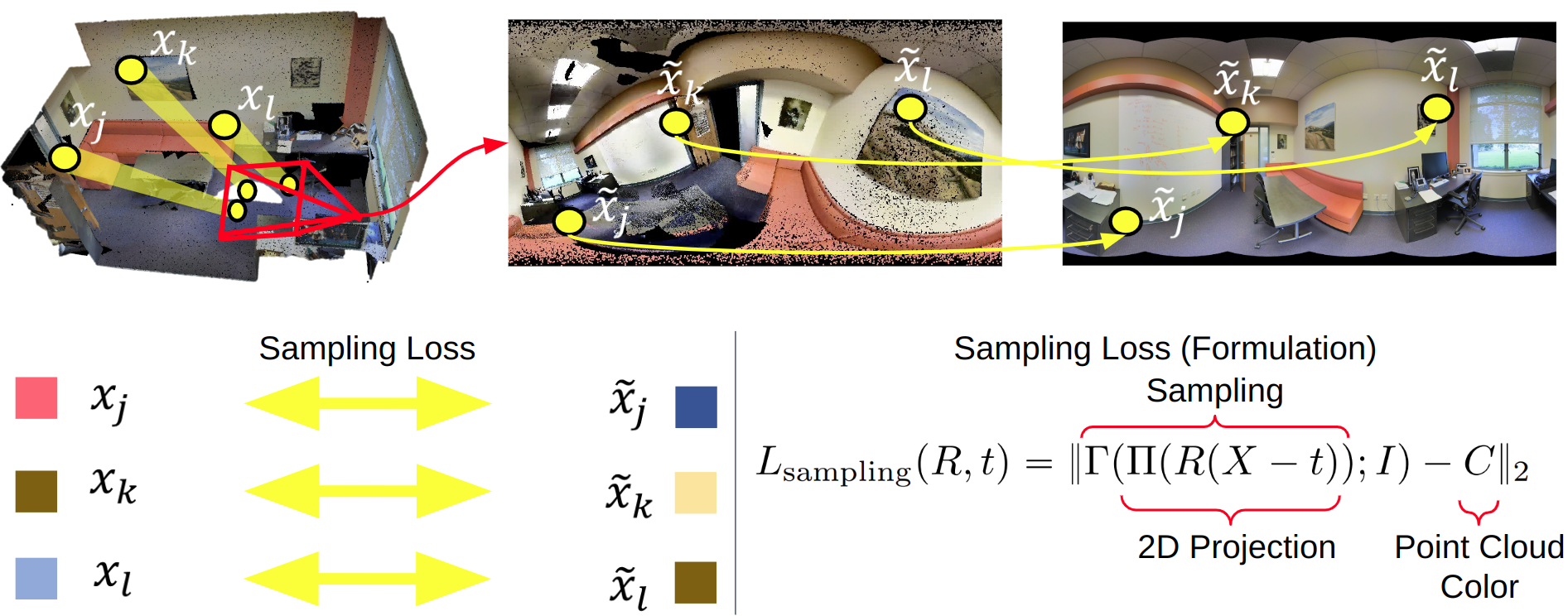

PICCOLO formulates omnidirectional localization as an optimization problem: the method minimizes a novel loss function called sampling loss. Given a candidate pose as shown above, sampling loss measures the discrepancy between each point's color and the image color sampled at that point's projected location. The loss is fully differentiable, and thus can be minimized using gradient descent optimization.
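As a rough illustration of the sampling loss described above, the following NumPy sketch projects each point onto an equirectangular panorama, bilinearly samples the image there, and averages the squared color difference. The function names, the pose convention `x_cam = R (x - t)`, the axis layout of the panorama, and the mean-squared-error form are assumptions made for this sketch, not details confirmed by the text. A differentiable version would use an autodiff framework in place of NumPy.

```python
import numpy as np

def project_to_panorama(points, R, t, height, width):
    """Map 3D points to continuous equirectangular pixel coordinates (u, v)."""
    # Assumed pose convention: camera-frame point = R @ (world point - t).
    cam = (points - t) @ R.T
    norm = np.maximum(np.linalg.norm(cam, axis=1), 1e-12)
    azimuth = np.arctan2(cam[:, 1], cam[:, 0])               # in [-pi, pi]
    elevation = np.arcsin(np.clip(cam[:, 2] / norm, -1, 1))  # in [-pi/2, pi/2]
    u = (azimuth + np.pi) / (2 * np.pi) * width              # column coordinate
    v = (np.pi / 2 - elevation) / np.pi * height             # row coordinate
    return u, v

def bilinear_sample(image, u, v):
    """Bilinearly sample an (H, W, C) image, wrapping horizontally."""
    h, w, _ = image.shape
    u, v = u - 0.5, v - 0.5                  # pixel centers sit at integers
    u0, v0 = np.floor(u).astype(int), np.floor(v).astype(int)
    du, dv = (u - u0)[:, None], (v - v0)[:, None]
    ul, ur = u0 % w, (u0 + 1) % w            # wrap around the 360° seam
    vt, vb = np.clip(v0, 0, h - 1), np.clip(v0 + 1, 0, h - 1)  # clamp at poles
    top = (1 - du) * image[vt, ul] + du * image[vt, ur]
    bottom = (1 - du) * image[vb, ul] + du * image[vb, ur]
    return (1 - dv) * top + dv * bottom

def sampling_loss(points, colors, image, R, t):
    """Mean squared difference between point colors and sampled image colors."""
    h, w, _ = image.shape
    u, v = project_to_panorama(points, R, t, h, w)
    sampled = bilinear_sample(image, u, v)
    return float(np.mean(np.sum((sampled - colors) ** 2, axis=1)))

# Synthetic check: color the points from the image at a known pose, then
# compare the loss at that pose with the loss at a shifted pose.
rng = np.random.default_rng(0)
image = rng.random((32, 64, 3))
points = rng.normal(size=(200, 3)) * 3.0
true_R, true_t = np.eye(3), np.zeros(3)
u_true, v_true = project_to_panorama(points, true_R, true_t, 32, 64)
colors = bilinear_sample(image, u_true, v_true)

loss_true = sampling_loss(points, colors, image, true_R, true_t)
loss_off = sampling_loss(points, colors, image, true_R, np.array([0.5, 0.0, 0.0]))
```

Note that the loss is a sum over points rather than over pixels, so the cost of one evaluation scales with the size of the point cloud and no panorama has to be rendered from the candidate pose.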

Here’s how gradient descent steps update the projected point cloud, which eventually converges to match the query image. We illustrate the trajectory from a single starting point, but in practice multiple starting points are optimized. Note that even when starting from a position fairly far from the ground truth, sampling loss can reliably converge to the accurate location.
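The multi-start refinement described above can be sketched generically as follows. This is a minimal illustration under stated assumptions: `loss_fn` is a hypothetical stand-in for sampling loss as a function of a pose vector, central finite differences replace the automatic differentiation a real implementation would likely use, and the step size and iteration count are arbitrary. The toy loss has one spurious basin so that the benefit of several starting points is visible.

```python
import numpy as np

def numerical_gradient(loss_fn, x, eps=1e-4):
    """Central finite-difference gradient (a stand-in for autodiff)."""
    grad = np.zeros_like(x)
    for i in range(x.size):
        step = np.zeros_like(x)
        step[i] = eps
        grad[i] = (loss_fn(x + step) - loss_fn(x - step)) / (2 * eps)
    return grad

def refine(loss_fn, start, lr=0.1, steps=200):
    """Plain gradient descent from one starting point."""
    x = np.asarray(start, dtype=float).copy()
    for _ in range(steps):
        x -= lr * numerical_gradient(loss_fn, x)
    return x, loss_fn(x)

def refine_multi_start(loss_fn, starts, **kwargs):
    """Refine every starting point and keep the result with the lowest loss."""
    results = [refine(loss_fn, s, **kwargs) for s in starts]
    return min(results, key=lambda r: r[1])

# Toy loss with a spurious basin at the origin (loss 0.5) and the
# global minimum at (2, 0, 0) (loss 0).
spurious = np.array([0.0, 0.0, 0.0])
target = np.array([2.0, 0.0, 0.0])

def toy_loss(x):
    return float(min(np.sum((x - spurious) ** 2) + 0.5,
                     np.sum((x - target) ** 2)))

starts = [np.array([-0.5, 0.0, 0.0]), np.array([2.5, 0.3, 0.0])]
stuck_pose, stuck_loss = refine(toy_loss, starts[0])
best_pose, best_loss = refine_multi_start(toy_loss, starts)
```

In this toy setting the first start settles in the spurious basin, while taking the minimum over both refined starts recovers the global minimum.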

Unfortunately, sampling loss may contain spurious local minima. To escape local minima, PICCOLO first selects decent starting points from various candidate locations within a scene. Specifically, we partition the point cloud into a uniform grid and compute the sampling loss value at each grid location. Then, we select the top-K locations with the smallest sampling loss values, which are further refined by minimizing sampling loss in a continuous fashion.
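The initialization step above can be sketched as a coarse grid search followed by top-K selection. The sketch below is illustrative only: it searches over translations and omits candidate rotations, places candidates at the centers of grid cells over the point cloud's bounding box, and takes a hypothetical `loss_fn` callable in place of sampling loss. The function names and the `cells_per_axis` and `k` parameters are assumptions, not values from the text.

```python
import numpy as np

def candidate_translations(points, cells_per_axis=4):
    """Centers of a uniform grid over the point cloud's bounding box."""
    lo, hi = points.min(axis=0), points.max(axis=0)
    axes = [
        lo[d] + (np.arange(cells_per_axis) + 0.5) * (hi[d] - lo[d]) / cells_per_axis
        for d in range(3)
    ]
    gx, gy, gz = np.meshgrid(*axes, indexing="ij")
    return np.stack([gx.ravel(), gy.ravel(), gz.ravel()], axis=1)

def select_top_k(candidates, loss_fn, k=3):
    """Evaluate the loss at every candidate and keep the k smallest."""
    losses = np.array([loss_fn(c) for c in candidates])
    order = np.argsort(losses)[:k]
    return candidates[order], losses[order]

# Toy example: the "scene" spans the unit cube, and a quadratic stands in
# for sampling loss, with its minimum at a made-up camera position.
points = np.array([[0.0, 0.0, 0.0], [1.0, 1.0, 1.0]])
camera = np.array([0.6, 0.4, 0.1])

def toy_loss(t):
    return float(np.sum((t - camera) ** 2))

candidates = candidate_translations(points, cells_per_axis=4)
starts, start_losses = select_top_k(candidates, toy_loss, k=3)
```

Each row of `starts` would then serve as an initial pose for continuous refinement, so a coarse grid only needs to land a candidate inside the correct basin rather than at the exact pose.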

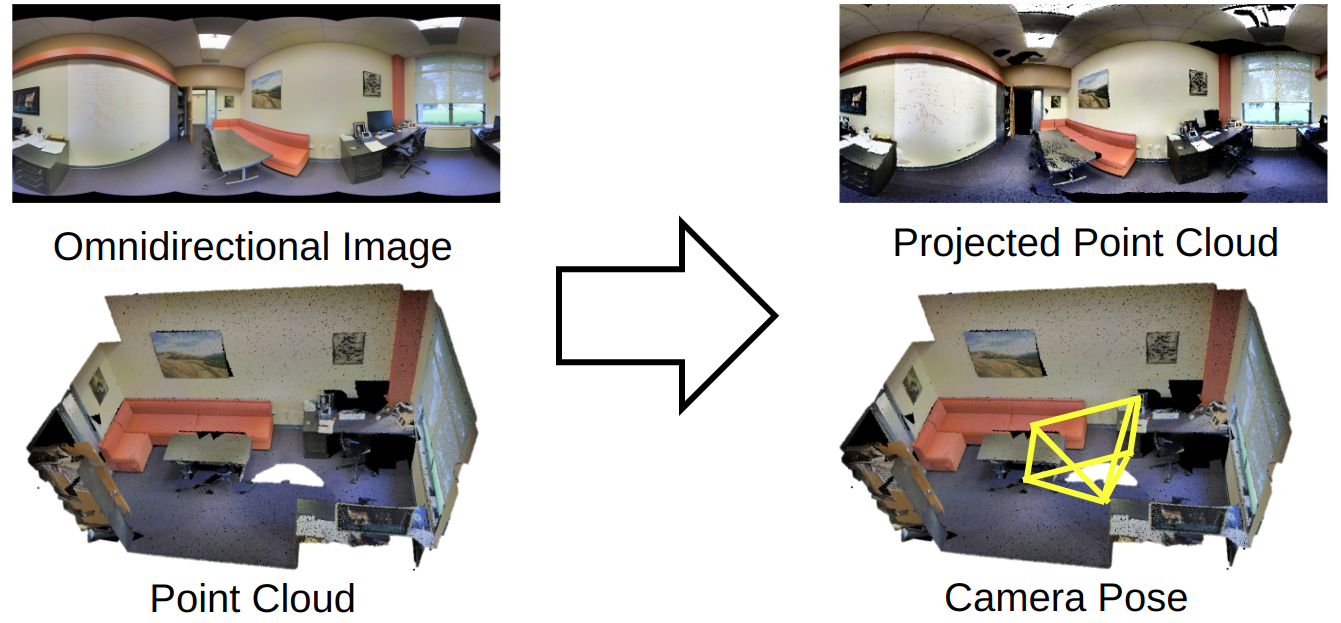

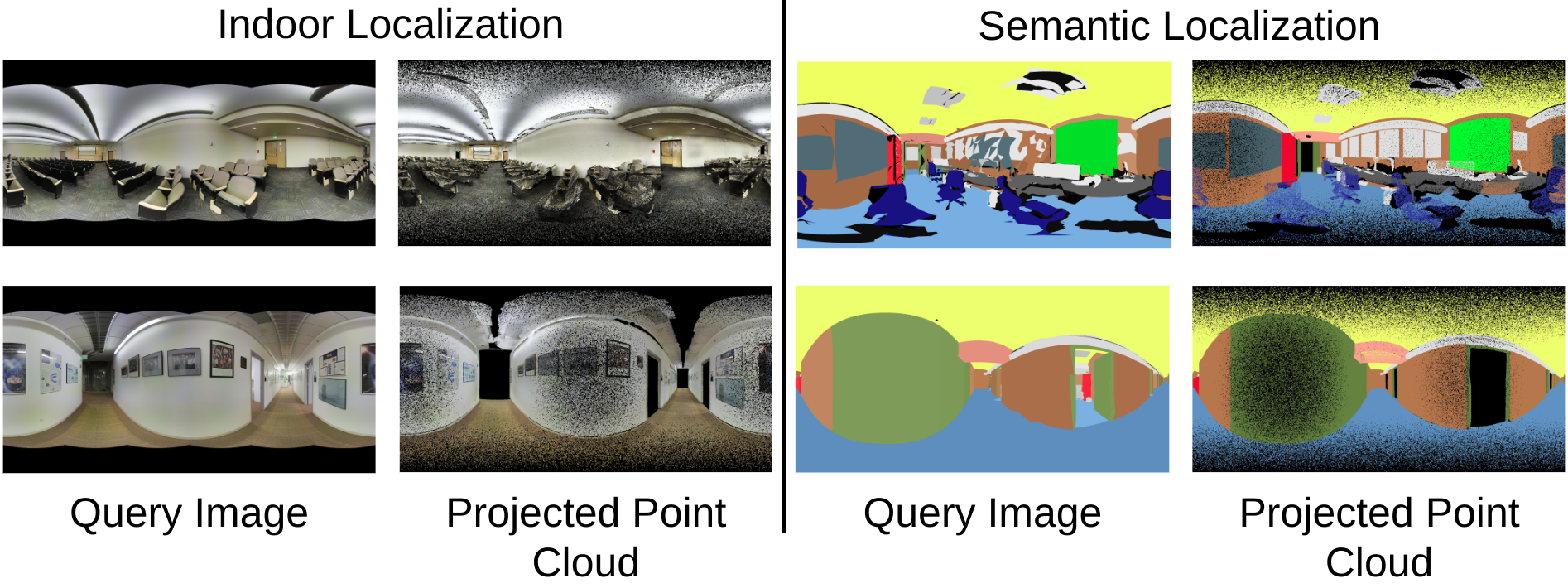

PICCOLO can localize in a wide range of scenarios where omnidirectional cameras are present. Despite the repetitive chairs and textureless walls on the left, PICCOLO can perform accurate localization. PICCOLO is also capable of handling input modalities other than color images, such as semantic labels. Although such modalities lack visual features, PICCOLO can successfully find the camera pose.

@InProceedings{Kim_2021_ICCV,
  author = {Kim, Junho and Choi, Changwoon and Jang, Hojun and Kim, Young Min},
  title = {PICCOLO: Point Cloud-Centric Omnidirectional Localization},
  booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
  month = {October},
  year = {2021},
  pages = {3313-3323}
}