The absolute depth values of surrounding environments provide crucial cues for various assistive technologies, such as localization, navigation, and 3D structure estimation. We propose that accurate depth estimated from panoramic images can serve as a powerful and light-weight input for a wide range of downstream tasks requiring 3D information. While panoramic images can easily capture the surrounding context from commodity devices, the estimated depth shares the limitations of conventional image-based depth estimation; the performance deteriorates under large domain shifts and the absolute values are still ambiguous to infer from 2D observations. By taking advantage of the holistic view, we mitigate such effects in a self-supervised way and fine-tune the network with geometric consistency during the test phase. Specifically, we construct a 3D point cloud from the current depth prediction and project the point cloud at various viewpoints or apply stretches on the current input image to generate synthetic panoramas. Then we minimize the discrepancy of the 3D structure estimated from synthetic images without collecting additional data. We empirically evaluate our method in robot navigation and map-free localization where our method shows large performance enhancements. Our calibration method can therefore widen the applicability under various external conditions, serving as a key component for practical panorama-based machine vision systems.

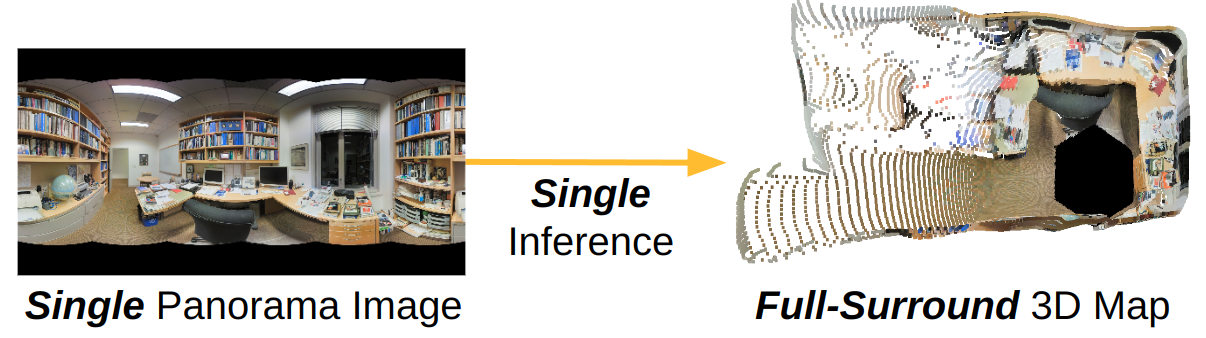

Given a single panorama image, panoramic depth estimation uses a pre-trained neural network to output a dense depth map. Since a full-surround 3D map is obtainable from a single network inference, panoramic depth estimation can enable light-weight 3D map creation.
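To make the step from a panoramic depth map to a full-surround 3D map concrete, here is a minimal NumPy sketch of back-projecting an equirectangular depth map into a point cloud. The function name `depth_to_point_cloud`, the axis convention (x right, y up, z forward), and the pixel-to-angle mapping are assumptions made for illustration, not details taken from the paper.

```python
import numpy as np


def depth_to_point_cloud(depth: np.ndarray) -> np.ndarray:
    """Back-project an equirectangular depth map (H, W) to an (H*W, 3) point cloud.

    Assumed convention (hypothetical, for illustration only):
    longitude spans [-pi, pi) left to right, latitude spans (pi/2, -pi/2)
    top to bottom, with axes x right, y up, z forward.
    """
    h, w = depth.shape
    # Angles of the pixel centers.
    lon = ((np.arange(w) + 0.5) / w) * 2.0 * np.pi - np.pi
    lat = np.pi / 2.0 - ((np.arange(h) + 0.5) / h) * np.pi
    lon, lat = np.meshgrid(lon, lat)  # both have shape (H, W)

    # Unit ray direction for every pixel on the sphere.
    dirs = np.stack(
        [np.cos(lat) * np.sin(lon), np.sin(lat), np.cos(lat) * np.cos(lon)],
        axis=-1,
    )
    # Scale each ray by its predicted depth (distance along the ray).
    return (dirs * depth[..., None]).reshape(-1, 3)
```

Under this convention, a constant depth map produces points on a sphere whose radius equals that depth, which is a quick sanity check for the mapping.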

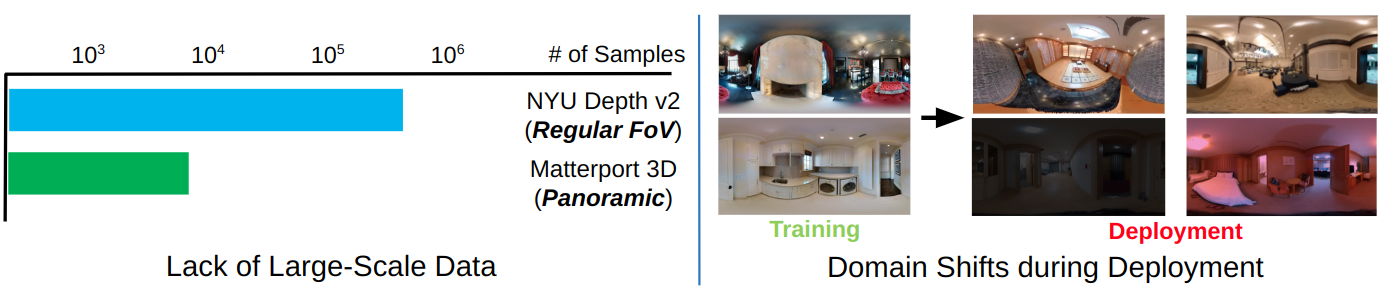

One of the key challenges in panoramic depth estimation is the small size of depth-annotated panoramic datasets compared to their regular field-of-view counterparts. As a result, existing panoramic depth estimation methods fail to generalize under the domain shifts that occur during deployment.

Given a network pre-trained on a large number of panorama images, our calibration scheme aims to enhance its performance in new, unseen environments by training the network on a small number of test samples. Note that the training does not leverage any ground-truth annotations. The calibrated network can produce more accurate 3D maps, which will be beneficial for downstream applications such as navigation.

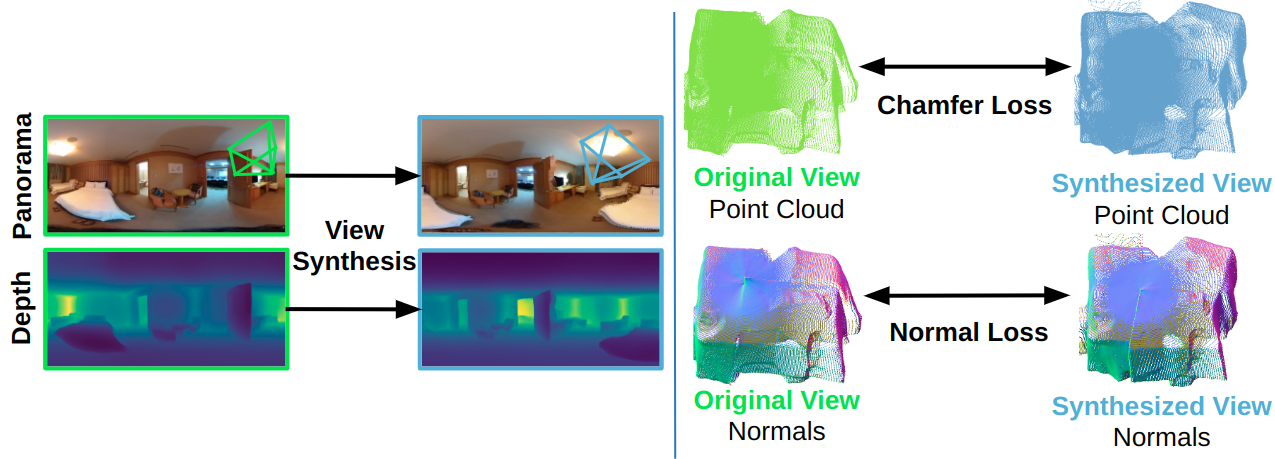

Our calibration scheme utilizes three loss functions. The multi-view loss functions (normal & chamfer) operate by synthesizing panoramas at random pose perturbations. Given the synthesized panoramas and their depth predictions, chamfer loss enforces the point cloud coordinates produced from the two depth maps to be similar, and normal loss additionally enforces the point cloud normals to be similar.
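The two multi-view terms can be sketched in NumPy as follows. This is an illustrative approximation rather than the released implementation: it assumes both point clouds are already expressed in a common reference frame, that per-point unit normals are given, and that normals are compared up to sign through nearest-neighbor matches. The function names are hypothetical, and the brute-force pairwise distances are only practical for small point sets.

```python
import numpy as np


def _pairwise_sq_dists(p: np.ndarray, q: np.ndarray) -> np.ndarray:
    """Squared Euclidean distances between all points of p (N, 3) and q (M, 3)."""
    return ((p[:, None, :] - q[None, :, :]) ** 2).sum(axis=-1)


def chamfer_loss(p: np.ndarray, q: np.ndarray) -> float:
    """Symmetric chamfer distance: mean nearest-neighbor distance in both directions."""
    d = np.sqrt(_pairwise_sq_dists(p, q))
    return float(d.min(axis=1).mean() + d.min(axis=0).mean())


def normal_loss(p: np.ndarray, n_p: np.ndarray, q: np.ndarray, n_q: np.ndarray) -> float:
    """Mean (1 - |cos|) between each normal in n_p and the normal of its nearest point in q.

    Assumption: normals are unit length, and the sign of a normal is ignored.
    """
    nearest = _pairwise_sq_dists(p, q).argmin(axis=1)
    cos = np.abs((n_p * n_q[nearest]).sum(axis=-1))
    return float((1.0 - cos).mean())
```

Both terms are zero when the two predictions describe the same surface, so minimizing them pushes the network toward depth maps that agree across the synthesized viewpoints.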

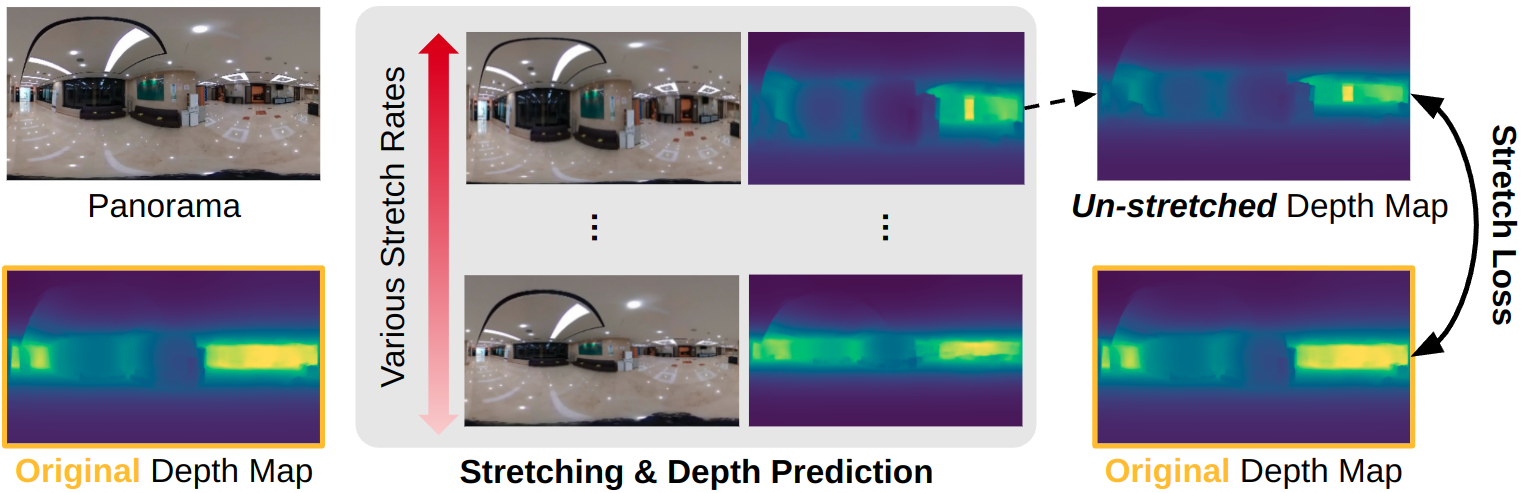

Stretch loss further imposes depth map consistency against stretched panoramas. The loss specifically targets small- or large-scale scenes, where we empirically observed depth estimation errors to increase far more than under other sources of domain shift. To elaborate, we synthesize panoramas at various stretch rates and impose depth map consistency against each stretched panorama.
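The geometry behind a stretch-consistency term can be illustrated with a short NumPy sketch. It rests on assumptions that are not stated on this page: the stretch is modeled as scaling the two horizontal axes (x and z) by a rate `k` while leaving the vertical axis (y) untouched, and consistency is measured in 3D as a one-sided nearest-neighbor distance instead of directly on depth maps. All function names are hypothetical.

```python
import numpy as np


def stretch_points(points: np.ndarray, k: float) -> np.ndarray:
    """Scale the horizontal axes (assumed x and z) of (N, 3) points by k; y is vertical."""
    return points * np.array([k, 1.0, k])


def stretched_depth(points: np.ndarray, k: float) -> np.ndarray:
    """Depth (distance to the camera) each point would have after the stretch."""
    return np.linalg.norm(stretch_points(points, k), axis=1)


def stretch_consistency(points_ref: np.ndarray, points_pred: np.ndarray, k: float) -> float:
    """Discrepancy between a reference cloud and a cloud predicted from a stretched panorama.

    The predicted cloud is first un-stretched by 1/k, then compared to the
    reference with a one-sided mean nearest-neighbor distance (brute force).
    """
    unstretched = stretch_points(points_pred, 1.0 / k)
    d = np.linalg.norm(unstretched[:, None, :] - points_ref[None, :, :], axis=-1)
    return float(d.min(axis=1).mean())
```

In this model, a horizontal ray has its depth multiplied by `k` and a vertical ray keeps its depth, so a room stretched with `k > 1` looks like a larger scene to the network. This is why such a term can probe scale-related errors.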

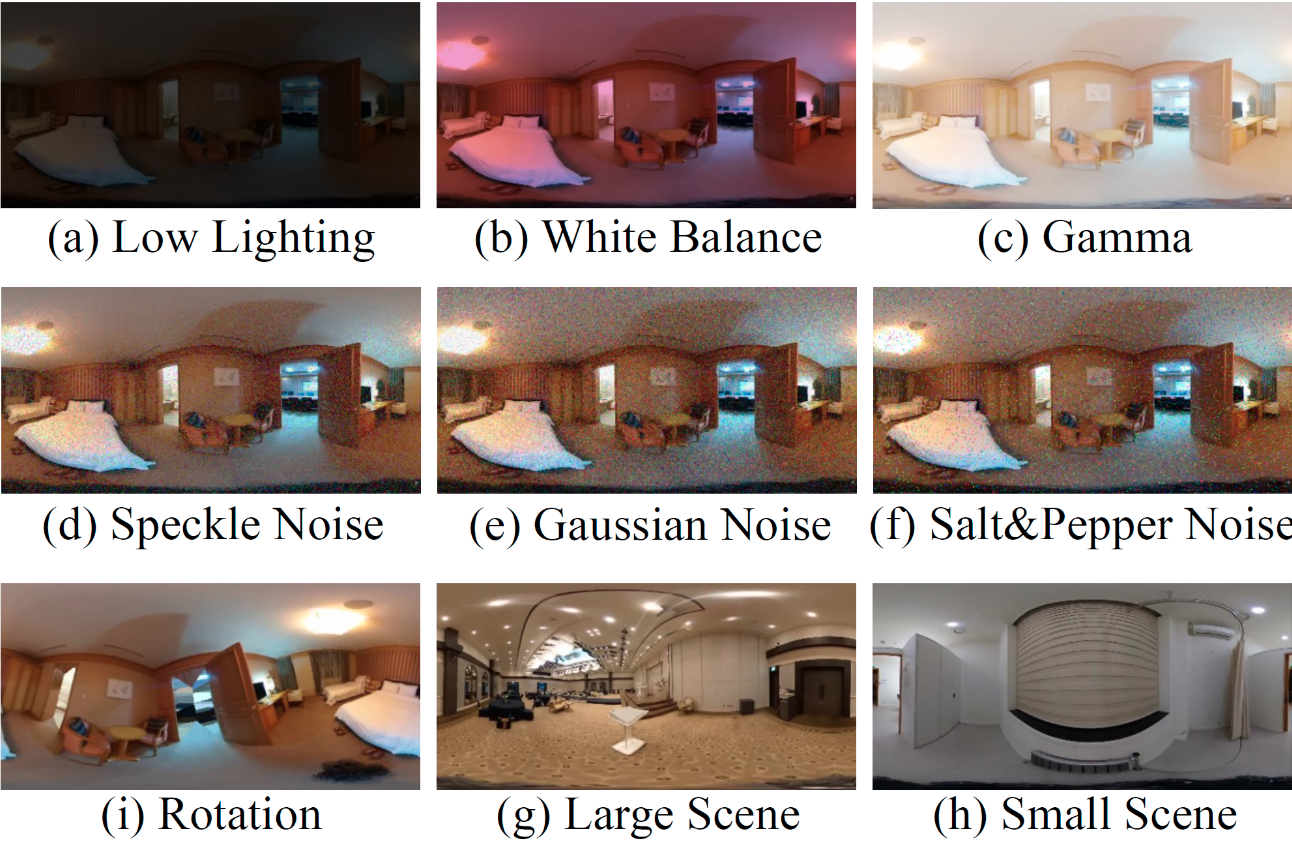

We test our calibration method on a wide variety of domain shifts that could occur in robotics or AR/VR applications. Specifically, we consider global lighting changes (image gamma, white balance, low lighting), image noise (Gaussian, speckle, salt-and-pepper), and geometric changes (scene scale change to large/small scenes, camera rotation).
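For readers who want to reproduce similar perturbations, the following NumPy sketch shows one plausible way to synthesize a few of the photometric shifts listed above. The parameterization (`gamma`, `sigma`, `amount`) and the assumption that images are floats in [0, 1] are illustrative choices and may differ from the settings used in our experiments.

```python
import numpy as np


def adjust_gamma(img: np.ndarray, gamma: float) -> np.ndarray:
    """Global lighting change: raise intensities in [0, 1] to the power gamma."""
    return np.clip(img, 0.0, 1.0) ** gamma


def add_gaussian_noise(img: np.ndarray, sigma: float, rng: np.random.Generator) -> np.ndarray:
    """Additive zero-mean Gaussian noise, clipped back to [0, 1]."""
    return np.clip(img + rng.normal(0.0, sigma, img.shape), 0.0, 1.0)


def add_speckle_noise(img: np.ndarray, sigma: float, rng: np.random.Generator) -> np.ndarray:
    """Multiplicative (speckle) noise, clipped back to [0, 1]."""
    return np.clip(img * (1.0 + rng.normal(0.0, sigma, img.shape)), 0.0, 1.0)


def add_salt_and_pepper(img: np.ndarray, amount: float, rng: np.random.Generator) -> np.ndarray:
    """Set roughly amount/2 of the pixels to black and amount/2 to white."""
    out = img.copy()
    u = rng.random(img.shape[:2])      # one draw per pixel, shared by all channels
    out[u < amount / 2.0] = 0.0        # pepper
    out[u > 1.0 - amount / 2.0] = 1.0  # salt
    return out
```

Passing an explicit `np.random.default_rng(seed)` keeps the corrupted test set reproducible across runs.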

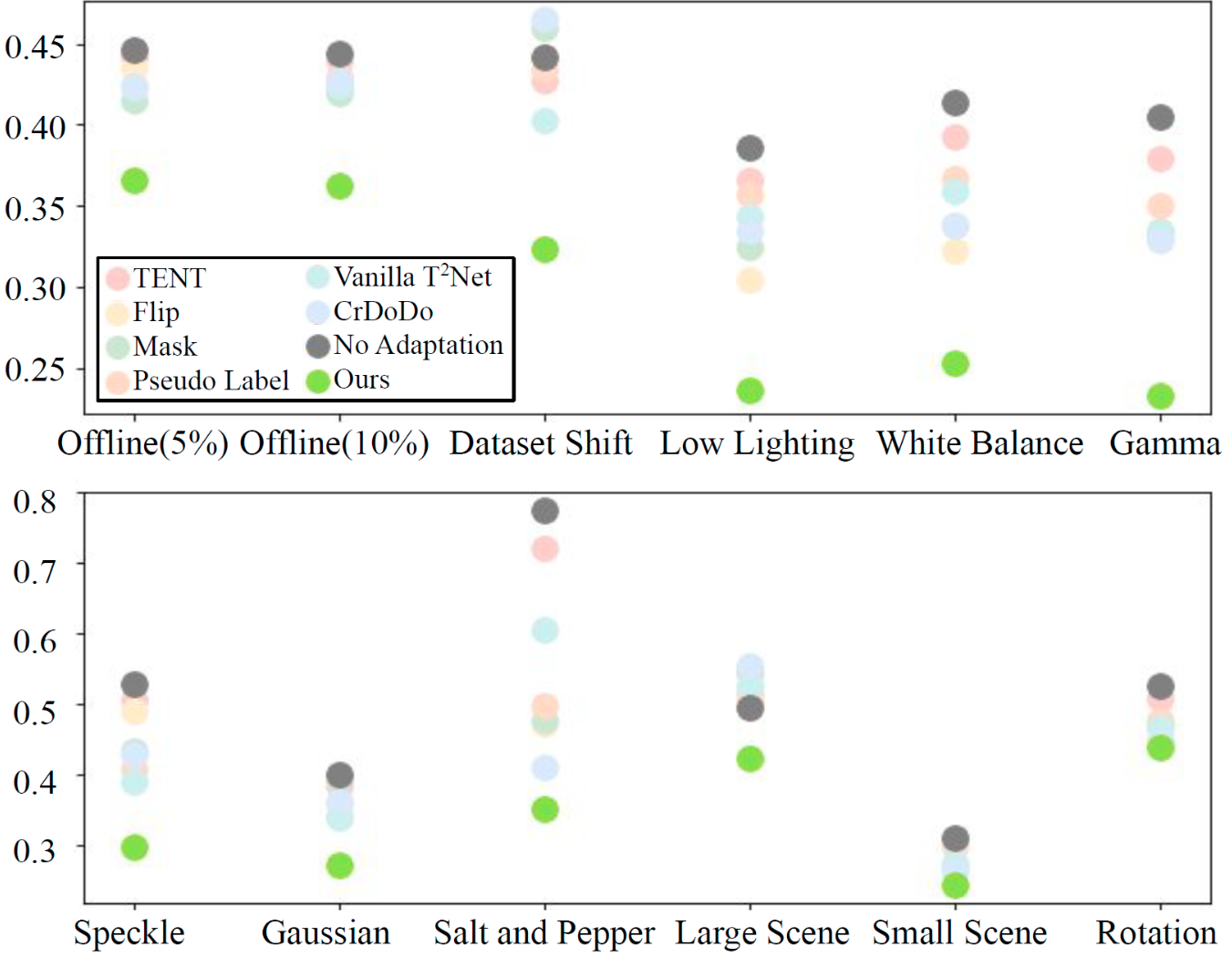

The plot shown here reports the mean absolute error (MAE) of various adaptation methods evaluated on the Stanford2D-3D-S and OmniScenes datasets. Our method outperforms the baselines under the aforementioned domain shifts, with a decrease in MAE of more than 10 cm in most cases.
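As a reference for how such a number can be computed, here is a minimal NumPy sketch of a masked MAE between predicted and ground-truth depth maps. The masking rule (ignoring pixels whose ground-truth depth is at or below a small threshold `min_depth`) is an assumption about how missing measurements are encoded, not a description of our exact evaluation protocol.

```python
import numpy as np


def masked_mae(pred: np.ndarray, gt: np.ndarray, min_depth: float = 1e-3) -> float:
    """Mean absolute depth error over pixels with valid ground truth.

    Assumption: pixels with gt <= min_depth carry no measurement and are skipped.
    Returns NaN if no pixel is valid.
    """
    valid = gt > min_depth
    if not valid.any():
        return float("nan")
    return float(np.abs(pred[valid] - gt[valid]).mean())
```

With depths expressed in meters, an improvement of 0.10 in this value corresponds to the 10 cm reduction quoted above.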

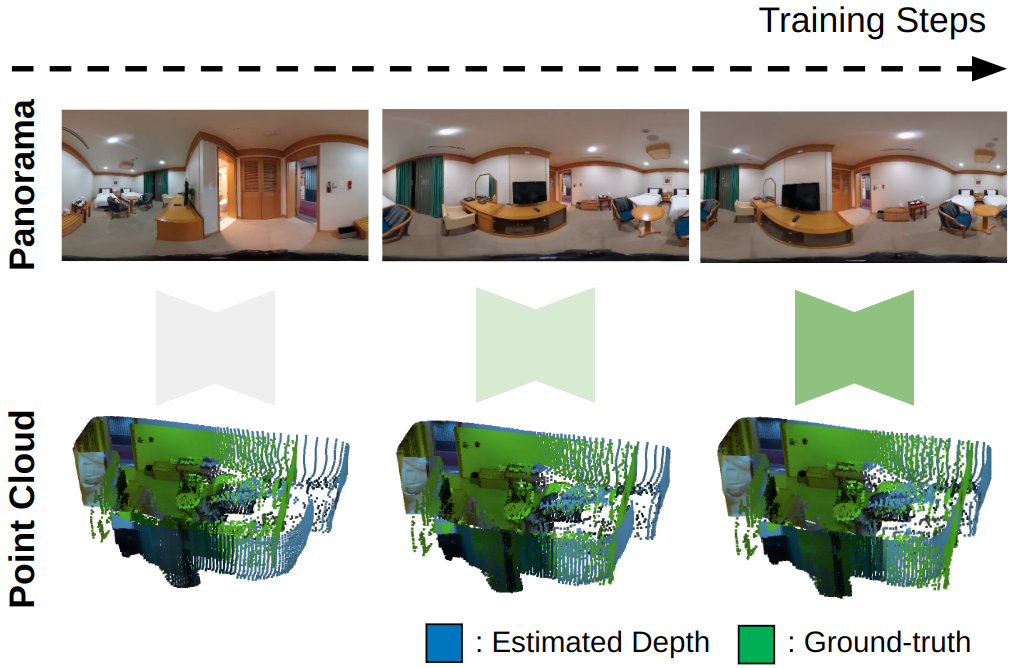

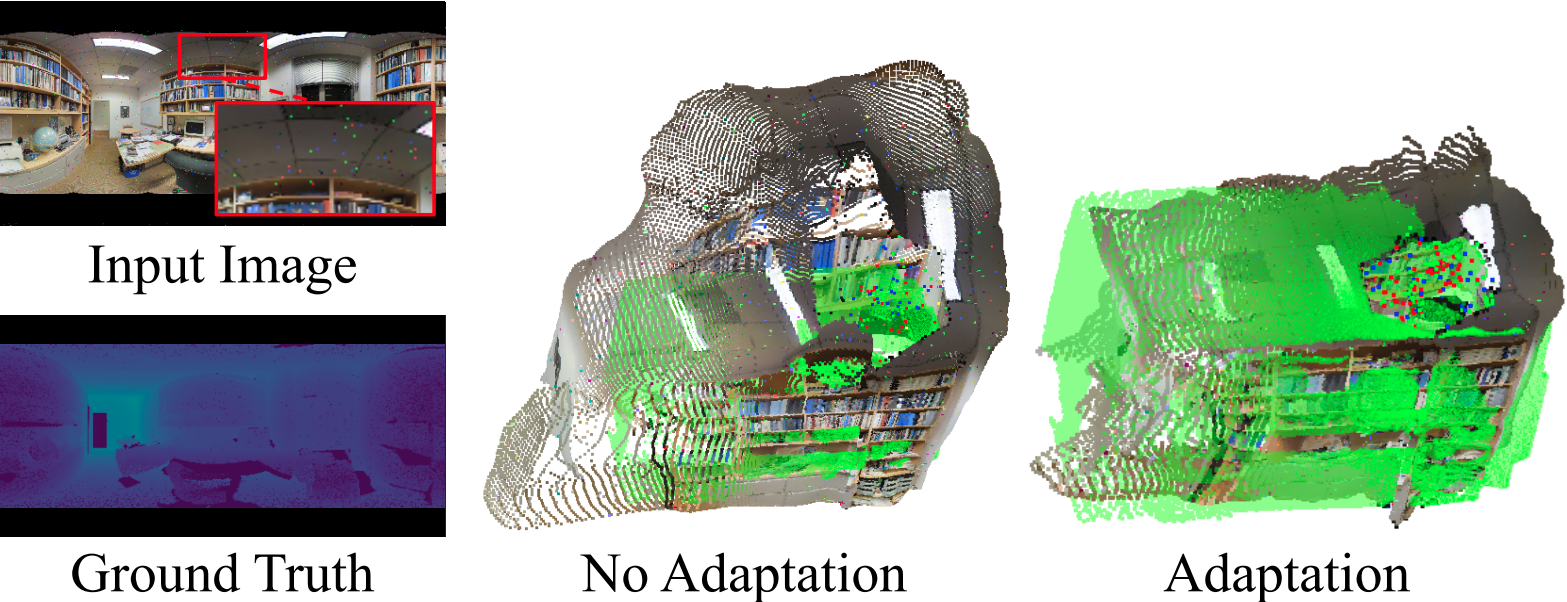

Here we show qualitative results of depth estimation before and after applying our calibration scheme. Prior to calibration, the depth maps are very noisy due to the salt-and-pepper noise shown on the left. After applying our calibration scheme, the depth maps better align with the ground truth shown in green.

Here's an animation showing the depth estimation results obtained from our calibration scheme. While the initial depth estimation network produces noisy depth estimates, the calibration leads to enhanced depth predictions after a few training iterations.

@InProceedings{Kim_2023_ICCV,
    author    = {Kim, Junho and Lee, Eun Sun and Kim, Young Min},
    title     = {Calibrating Panoramic Depth Estimation for Practical Localization and Mapping},
    booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
    month     = {October},
    year      = {2023},
    pages     = {8830-8840}
}