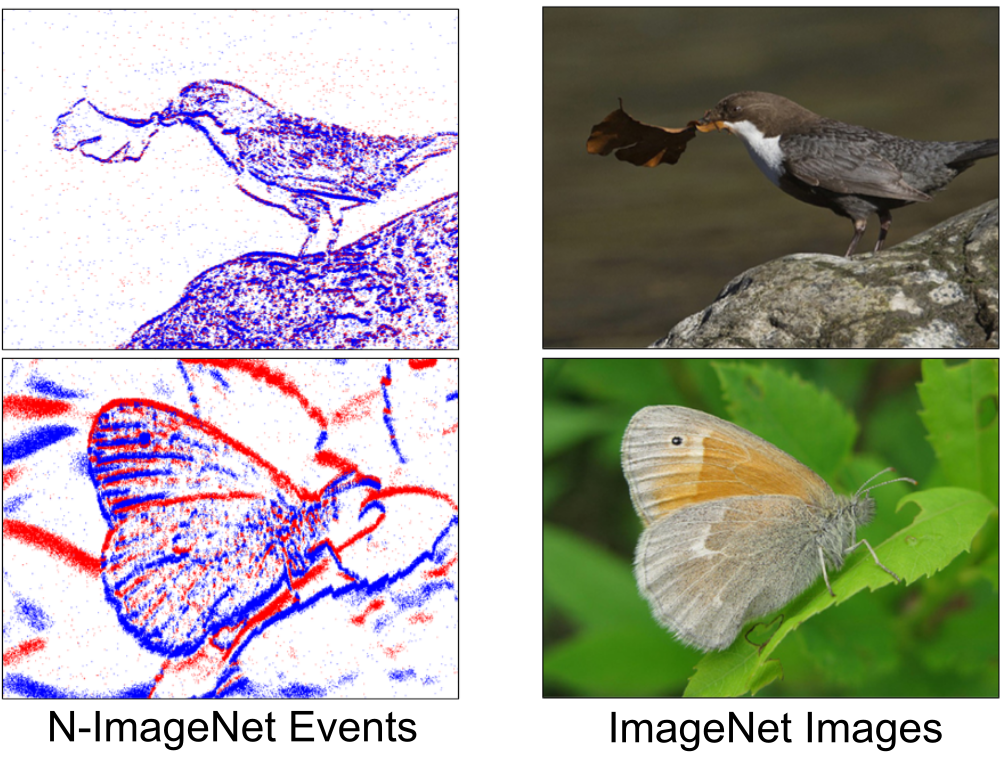

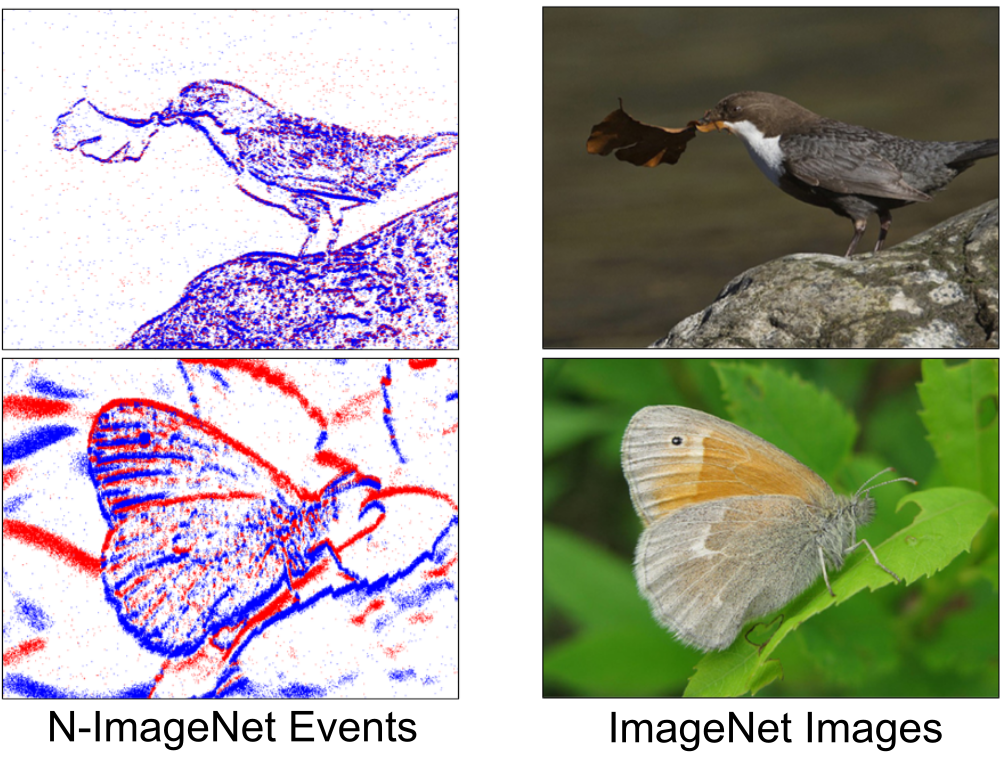

We introduce N-ImageNet, a large-scale dataset targeted at robust, fine-grained object recognition with event cameras. The dataset is collected using programmable hardware in which an event camera consistently moves around a monitor displaying images from ImageNet. N-ImageNet serves as a challenging benchmark for event-based object recognition, due to its large number of classes and samples. We empirically show that pretraining on N-ImageNet improves the performance of event-based classifiers and helps them learn from few labeled samples. In addition, we present several variants of N-ImageNet to test the robustness of event-based classifiers under diverse camera trajectories and severe lighting conditions, and propose a novel event representation to alleviate the performance degradation. To the best of our knowledge, we are the first to quantitatively investigate the effects of various environmental conditions on event-based object recognition algorithms. N-ImageNet and its variants are expected to guide practical implementations for deploying event-based object recognition algorithms in the real world.

Event cameras are neuromorphic sensors that encode visual information as a sequence of events. In contrast to conventional frame-based cameras that output absolute brightness intensities, event cameras respond to brightness changes. The following figure illustrates how event cameras function compared to conventional cameras. Notice how brightness changes are encoded as 'streams' in the spatio-temporal domain.

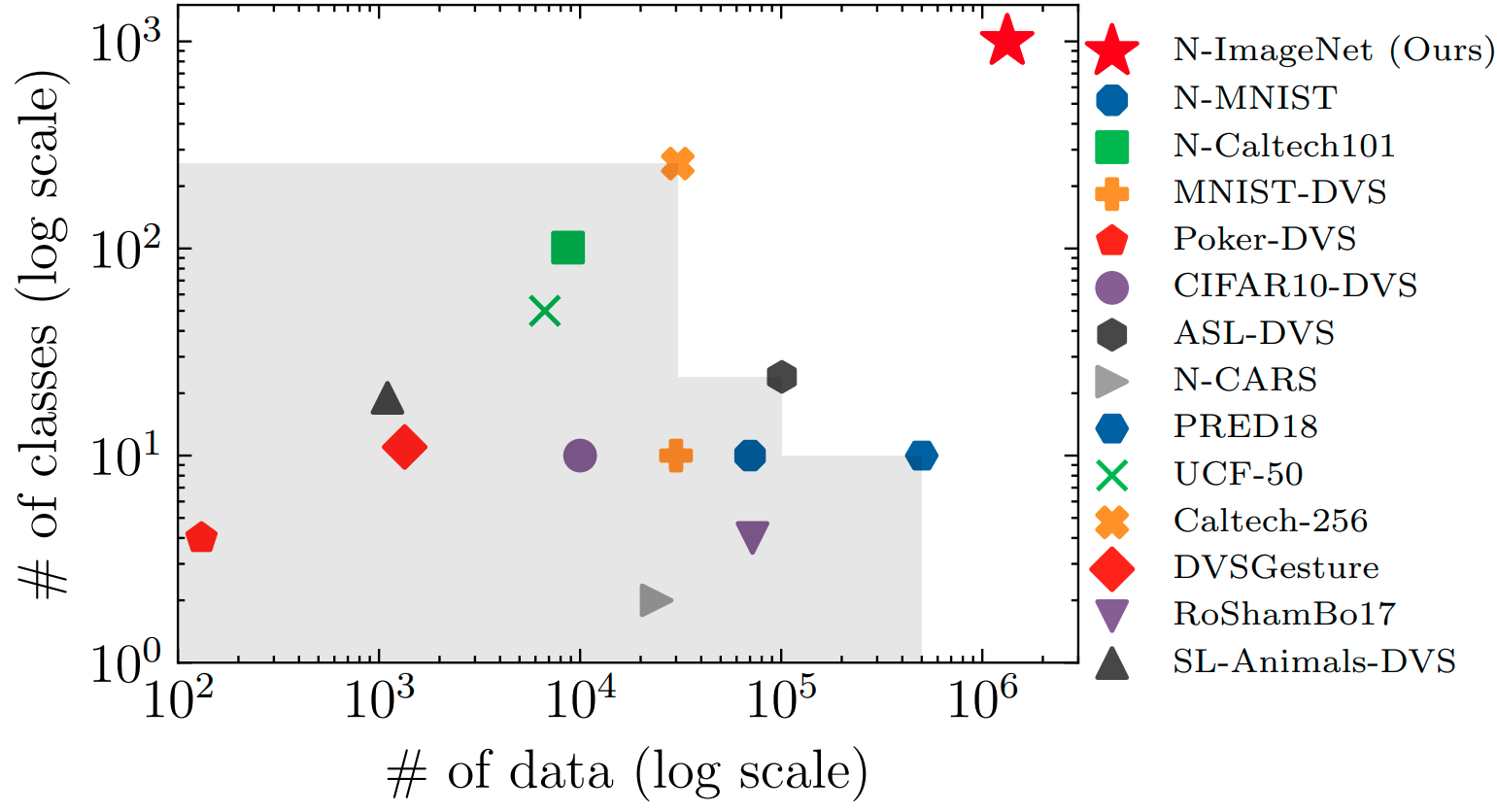
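To make the idea of responding to brightness changes concrete, here is a minimal sketch of an idealized event generation model. It is not the sensor's actual circuitry or the pipeline used to record N-ImageNet. It assumes a common simplification in which a pixel fires an event whenever its log intensity has changed by at least a contrast threshold since that pixel's last event. The function name `frames_to_events`, the `threshold` and `eps` parameters, and the `(x, y, t, polarity)` output layout are all illustrative assumptions.

```python
import numpy as np


def frames_to_events(frames, timestamps, threshold=0.2, eps=1e-3):
    """Convert a stack of intensity frames into a list of idealized events.

    frames:     (T, H, W) array of non-negative intensities.
    timestamps: (T,) array with the time of each frame.
    Returns an (N, 4) float array with columns (x, y, t, polarity),
    where polarity is +1 for a brightness increase and -1 for a decrease.

    Simplification (assumption): each pixel emits at most one event per
    frame, whereas a real sensor fires asynchronously between frames.
    """
    log_frames = np.log(np.asarray(frames, dtype=np.float64) + eps)
    # Log intensity stored at each pixel when it last fired an event.
    reference = log_frames[0].copy()
    chunks = []
    for t, log_frame in zip(timestamps[1:], log_frames[1:]):
        diff = log_frame - reference
        fired = np.abs(diff) >= threshold
        ys, xs = np.nonzero(fired)
        if xs.size:
            polarity = np.sign(diff[ys, xs])
            times = np.full(xs.shape, t, dtype=np.float64)
            chunks.append(np.stack([xs, ys, times, polarity], axis=1))
        # Only pixels that fired update their reference level.
        reference[fired] = log_frame[fired]
    if not chunks:
        return np.empty((0, 4))
    return np.concatenate(chunks, axis=0)
```

Pixels whose brightness stays constant produce no output at all, which is why a static scene is invisible to an event camera. A change in the scene or motion of the camera is needed to generate events.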

N-ImageNet is a large-scale event dataset that enables training and benchmarking object recognition algorithms using event camera input. The dataset surpasses all existing event-based datasets in both size and label granularity.

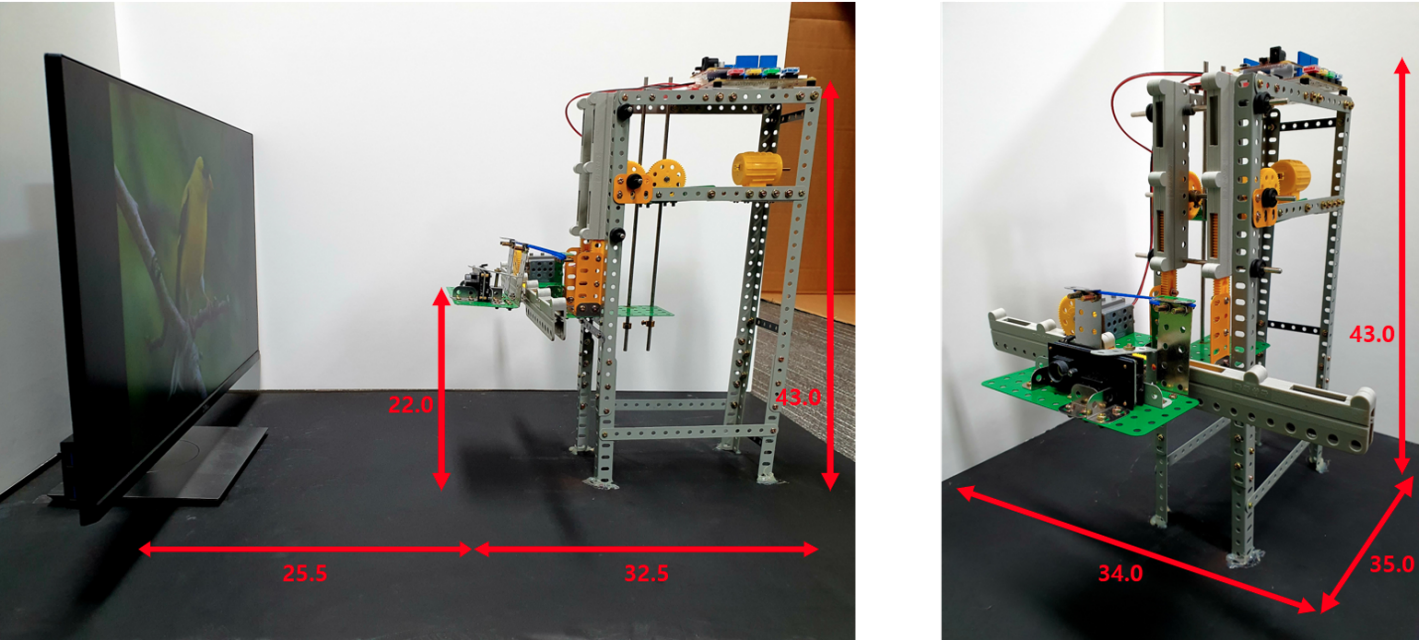

To capture N-ImageNet, we designed custom hardware that keeps the camera in perpetual motion. The device consists of two geared motors connected to a pair of perpendicularly adjacent gear racks, where the upper and lower motors are responsible for vertical and horizontal motion, respectively. Each motor is linked to a programmable Arduino board that controls the camera movement.

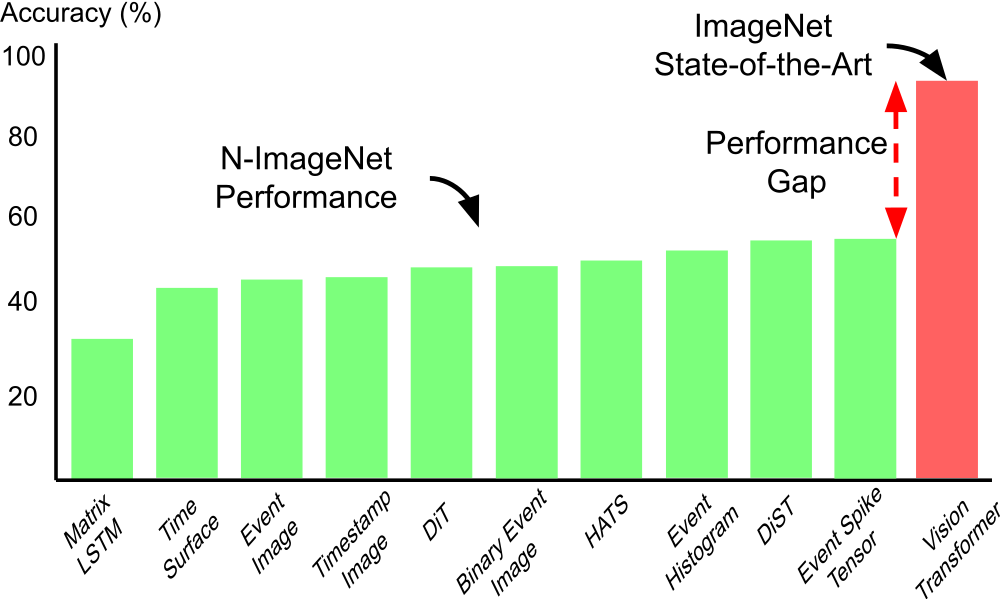

Due to its size and label diversity, N-ImageNet can function as a challenging benchmark for event-based object recognition. The plot below shows the classification accuracy of existing event-based recognition algorithms on N-ImageNet. A large performance gap remains relative to the state of the art on ImageNet, which suggests that N-ImageNet is still far from solved. We expect N-ImageNet to foster the development of event classifiers that can readily function in the real world.

Public Benchmark: Check out the public benchmark on event-based object recognition available at the following link. Feel free to upload new results to the benchmark!

Downloading Full N-ImageNet: Refer to the following link to download N-ImageNet. The full dataset is around 400 GB, so make sure you have enough disk space before downloading! Please email Junho Kim if you urgently need N-ImageNet and the file share links are not working.

Downloading Mini N-ImageNet: In 2022, we publicly released a smaller version of N-ImageNet, called mini N-ImageNet. The dataset contains 100 classes, one tenth of the classes in the original N-ImageNet. We expect the dataset to enable quick and lightweight evaluation of new event-based object recognition methods. To download the dataset, please refer to the following link.

Downloading Pretrained Models: To download the pretrained models, refer to the following link.

@InProceedings{Kim_2021_ICCV,
    author    = {Kim, Junho and Bae, Jaehyeok and Park, Gangin and Zhang, Dongsu and Kim, Young Min},
    title     = {N-ImageNet: Towards Robust, Fine-Grained Object Recognition With Event Cameras},
    booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
    month     = {October},
    year      = {2021},
    pages     = {2146-2156}
}