We introduce Ev-TTA, a simple and effective test-time adaptation algorithm for event-based object recognition. While event cameras are designed to provide measurements of scenes with fast motion or drastic illumination changes, many existing event-based recognition algorithms suffer from performance deterioration under such extreme conditions due to significant domain shifts. Ev-TTA mitigates these severe domain gaps by fine-tuning the pre-trained classifiers during the test phase, using loss functions inspired by the spatio-temporal characteristics of events. Since event data is a temporal stream of measurements, our loss function enforces similar predictions for adjacent events so that the model can quickly adapt to the changed environment online. We also exploit the spatial correlations between the two polarities of events to handle noise under extreme illumination, where the two polarities exhibit distinct noise distributions. Ev-TTA achieves large performance gains on a wide range of event-based object recognition tasks without extensive additional training. Our formulation can be applied regardless of the input representation and can be further extended to regression tasks. We expect Ev-TTA to be a key technique for deploying event-based vision algorithms in challenging real-world applications where significant domain shift is inevitable.

Event cameras are neuromorphic sensors that encode visual information as a sequence of events. In contrast to conventional frame-based cameras, which output absolute brightness intensities, event cameras respond to brightness changes: each event records the pixel location, timestamp, and polarity (sign) of a change. The following figure compares how event cameras and conventional cameras work. Notice how brightness changes are encoded as 'streams' in the spatio-temporal domain.

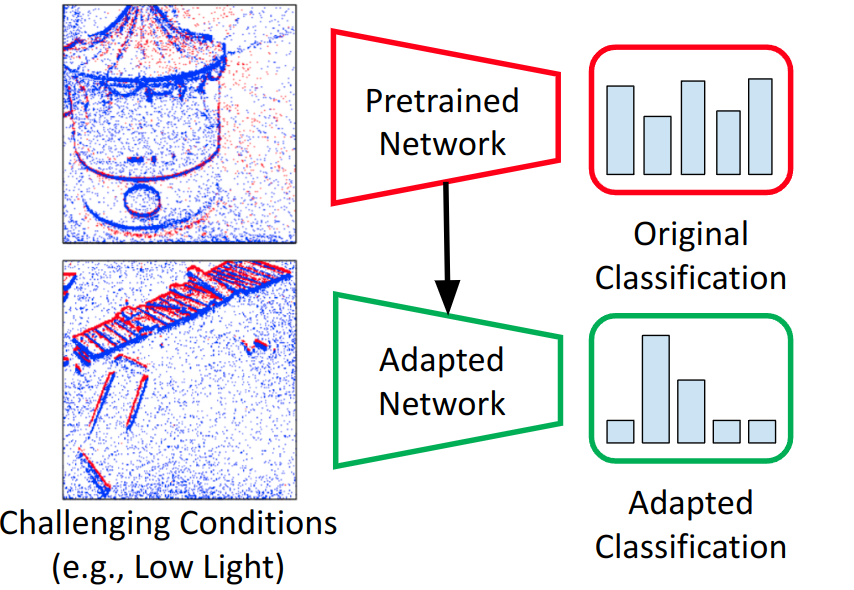
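To make the "respond to brightness changes" behavior concrete, here is a minimal sketch of the idealized event generation model commonly used in event-camera simulators: a pixel fires an event each time its log intensity moves by a contrast threshold away from the level at which it last fired. The function name `generate_events`, the threshold value, and the frame-based input are assumptions for illustration only; real sensors fire asynchronously and add noise, refractory periods, and per-pixel threshold variation.

```python
import numpy as np


def generate_events(log_frames, timestamps, threshold=0.25):
    """Idealized event generation from log-intensity frames of shape (T, H, W).

    Hypothetical helper for illustration. Returns (x, y, t, polarity) tuples,
    with polarity +1 for brightening and -1 for darkening.
    """
    ref = log_frames[0].astype(float).copy()  # per-pixel reference log intensity
    events = []
    for frame, t in zip(log_frames[1:], timestamps[1:]):
        diff = frame - ref
        # Signed number of threshold crossings since the last event at each pixel.
        crossings = np.trunc(diff / threshold).astype(int)
        for y, x in zip(*np.nonzero(crossings > 0)):
            events.extend([(int(x), int(y), t, 1)] * int(crossings[y, x]))
        for y, x in zip(*np.nonzero(crossings < 0)):
            events.extend([(int(x), int(y), t, -1)] * int(-crossings[y, x]))
        # Advance the reference level by the crossings that produced events.
        ref = ref + threshold * crossings
    return events


frames = np.zeros((3, 2, 2))
frames[1, 0, 1] = 0.5    # pixel (x=1, y=0) brightens by two thresholds
frames[2, 1, 0] = -0.25  # pixel (x=0, y=1) darkens by one threshold
print(generate_events(frames, [0.0, 1.0, 2.0]))
```

A static pixel produces no events at all, which is why the output is a sparse stream rather than a dense frame.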

Event cameras can provide measurements in extreme conditions thanks to hardware-level benefits such as high dynamic range and high temporal resolution. However, classifiers trained on event captures from normal conditions do not generalize well to such challenging scenarios. Thus, a sensing-perception gap exists: the visual sensor provides robust measurements, but the downstream perception algorithms cannot properly handle the domain gap.

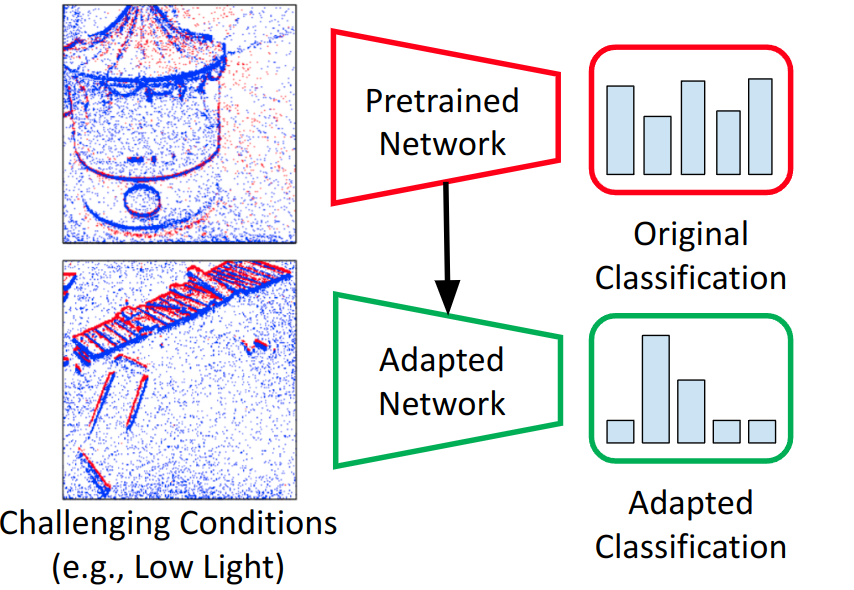

Ev-TTA mitigates the domain gaps caused by extreme measurement conditions by fine-tuning the classifier during the test phase. Consider a model trained with ground-truth labels on the source domain, shown on the left, which makes low-quality predictions in novel, unseen environments. Ev-TTA adapts this model to the new conditions online, without using any ground-truth labels.

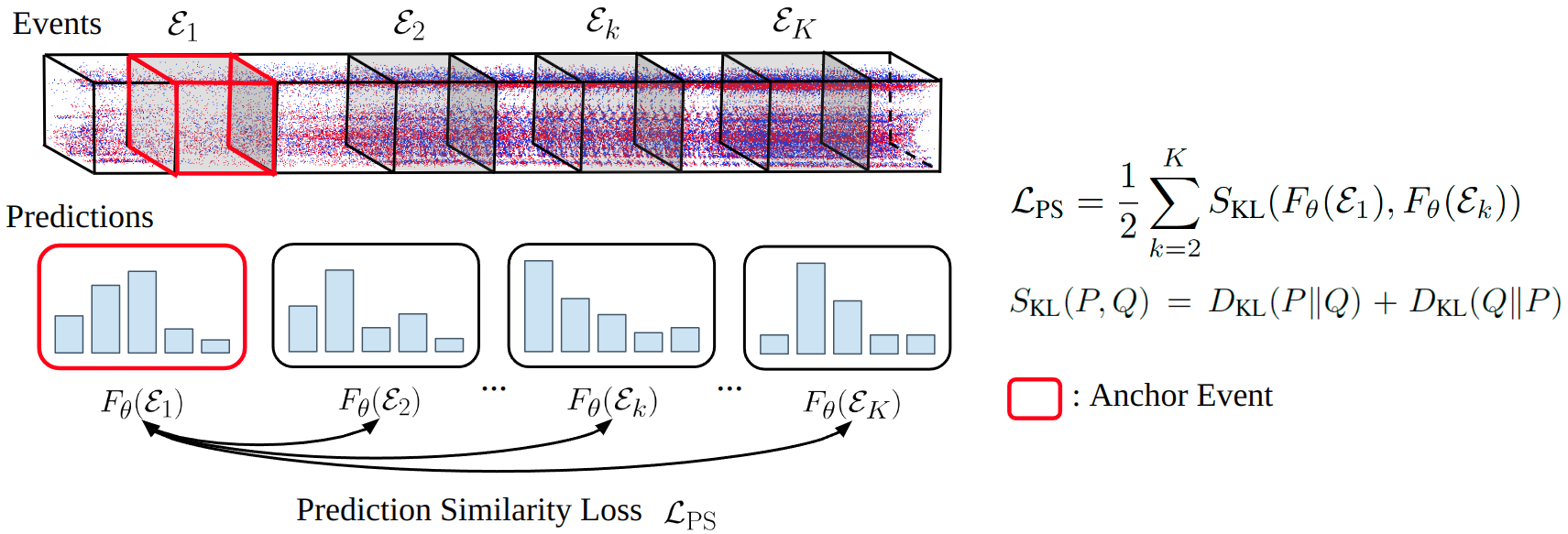

Ev-TTA uses two loss functions for adaptation. The prediction similarity loss encourages the predictions made on various regions of the event stream to match the prediction on the anchor event, shown as the red box. The loss is a symmetric KL divergence that penalizes deviations between the anchor prediction and each of the remaining predictions.
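As a rough illustration, the sketch below computes a symmetric KL divergence between the anchor prediction and the predictions on K other event slices. It assumes the classifier outputs raw logits and that the per-slice divergences are averaged; the averaging, the `eps` smoothing, and the function names are assumptions of this sketch rather than the paper's exact formulation. In an actual adaptation loop the same expression would be written in an autodiff framework so that gradients flow back into the classifier.

```python
import numpy as np


def softmax(logits):
    # Numerically stable softmax over the last (class) axis.
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def kl(p, q, eps=1e-12):
    # KL(p || q) over the last axis; eps guards against log(0).
    return np.sum(p * (np.log(p + eps) - np.log(q + eps)), axis=-1)


def prediction_similarity_loss(anchor_logits, other_logits):
    """Hypothetical sketch: mean symmetric KL between anchor and K other slices.

    anchor_logits: shape (C,); other_logits: shape (K, C).
    """
    p_anchor = softmax(anchor_logits)[None, :]  # (1, C), broadcast against (K, C)
    p_other = softmax(other_logits)             # (K, C)
    symmetric = kl(p_anchor, p_other) + kl(p_other, p_anchor)  # (K,)
    return float(symmetric.mean())


anchor = np.array([2.0, 0.0, 0.0])
slices = np.array([[2.0, 0.0, 0.0], [0.0, 2.0, 0.0]])
print(prediction_similarity_loss(anchor, slices))  # > 0: second slice disagrees
```

Using both KL directions makes the penalty symmetric, so neither the anchor nor the other slices are singled out as the fixed "target" distribution.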

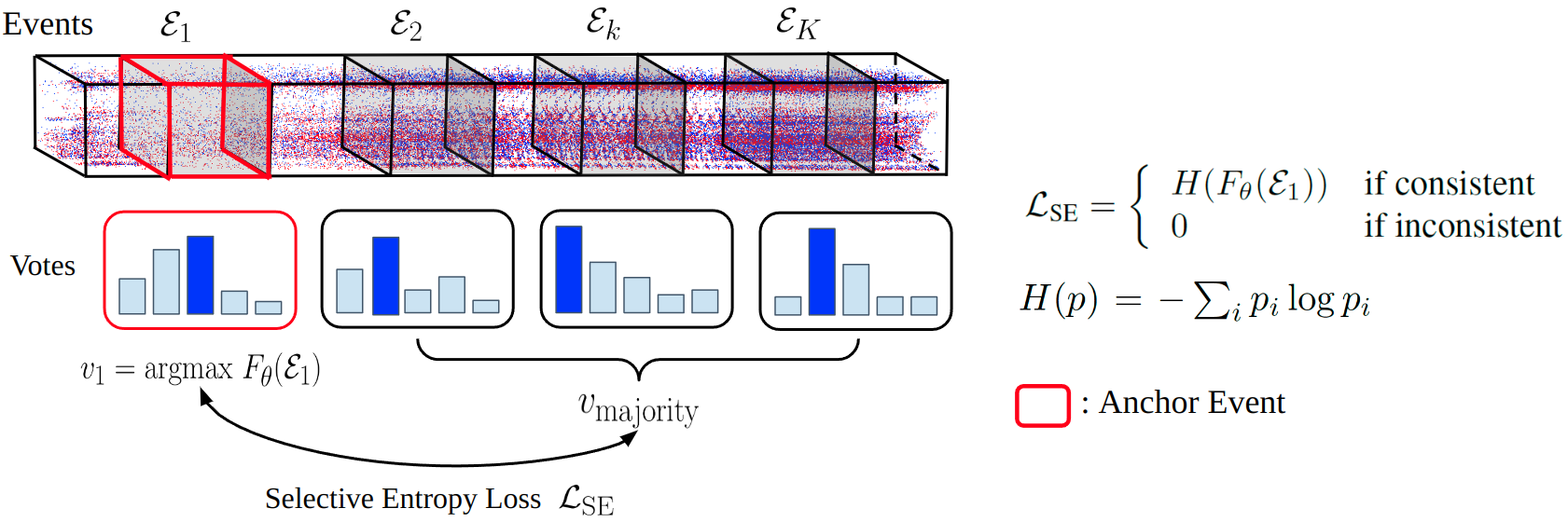

The selective entropy loss improves the quality of the anchor prediction. It minimizes the prediction entropy of the anchor event only if the anchor prediction is consistent with the predictions made on the neighboring events. Consistency is determined by comparing the predicted class of the anchor event against the majority vote of the neighboring events.
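A minimal NumPy sketch of this selection rule follows, assuming logits for one anchor and several neighboring event slices. The function names are hypothetical, and ties in the majority vote are broken toward the lowest class index, which is an arbitrary choice of this sketch. Returning 0.0 when the prediction is inconsistent plays the role of masking the loss out; in an autodiff framework one would multiply the entropy by a 0/1 mask instead.

```python
import numpy as np


def softmax(logits):
    # Numerically stable softmax over the last (class) axis.
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def selective_entropy_loss(anchor_logits, neighbor_logits, eps=1e-12):
    """Hypothetical sketch: anchor entropy, applied only if it agrees with the vote.

    anchor_logits: shape (C,); neighbor_logits: shape (K, C).
    """
    p_anchor = softmax(anchor_logits)
    anchor_class = int(np.argmax(p_anchor))
    # Majority vote over the neighbors' predicted classes (ties -> lowest index).
    votes = np.argmax(neighbor_logits, axis=-1)
    majority = int(np.bincount(votes, minlength=p_anchor.shape[-1]).argmax())
    if anchor_class != majority:
        return 0.0  # inconsistent anchor: do not sharpen a likely-wrong prediction
    return float(-np.sum(p_anchor * np.log(p_anchor + eps)))


neighbors = np.array([[2.0, 0.0, 0.0], [0.0, 2.0, 0.0], [1.0, 0.0, 0.0]])
print(selective_entropy_loss(np.array([3.0, 0.0, 0.0]), neighbors))  # entropy applied
print(selective_entropy_loss(np.array([0.0, 3.0, 0.0]), neighbors))  # 0.0, masked out
```

The selection guards against a failure mode of plain entropy minimization, which would otherwise make confidently wrong anchor predictions even more confident.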

In low-light conditions, Ev-TTA additionally performs denoising based on spatial consistency. In low light, large amounts of noise often appear on a single event polarity, as can be observed on the negative polarity in the figure. For each event on the noisy polarity, we examine its neighbors in the opposite polarity; if the event has no such neighbors, we label it as noise and remove it.
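The neighbor test above can be sketched with an occupancy map of the opposite polarity that is dilated by a square window. This is a simplified illustration under several assumptions: the noisy polarity is given as input, the check is purely spatial (timestamps are ignored), and the window radius of 1 pixel is arbitrary. The paper's exact neighborhood definition may differ, and the function name is hypothetical.

```python
import numpy as np


def remove_unpaired_events(noisy_xy, other_xy, height, width, radius=1):
    """Hypothetical sketch: keep noisy-polarity events with an opposite-polarity neighbor.

    noisy_xy, other_xy: integer arrays of shape (N, 2) holding (x, y) coordinates.
    An event survives if any opposite-polarity event lies within a
    (2 * radius + 1) x (2 * radius + 1) window centered on it.
    """
    occupancy = np.zeros((height, width), dtype=bool)
    occupancy[other_xy[:, 1], other_xy[:, 0]] = True
    # Dilate the occupancy map: OR together all shifted copies inside the window.
    padded = np.pad(occupancy, radius)  # pads with False
    has_neighbor = np.zeros_like(occupancy)
    for dy in range(2 * radius + 1):
        for dx in range(2 * radius + 1):
            has_neighbor |= padded[dy:dy + height, dx:dx + width]
    keep = has_neighbor[noisy_xy[:, 1], noisy_xy[:, 0]]
    return noisy_xy[keep]


positive = np.array([[2, 2]])                          # opposite (clean) polarity
negative = np.array([[1, 1], [4, 4], [3, 2], [0, 0]])  # noisy polarity
print(remove_unpaired_events(negative, positive, height=5, width=5))
```

Here the isolated negative events at (4, 4) and (0, 0) are discarded, while those adjacent to the positive event at (2, 2) are kept. Building the dilated map once makes the per-event check a single array lookup.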

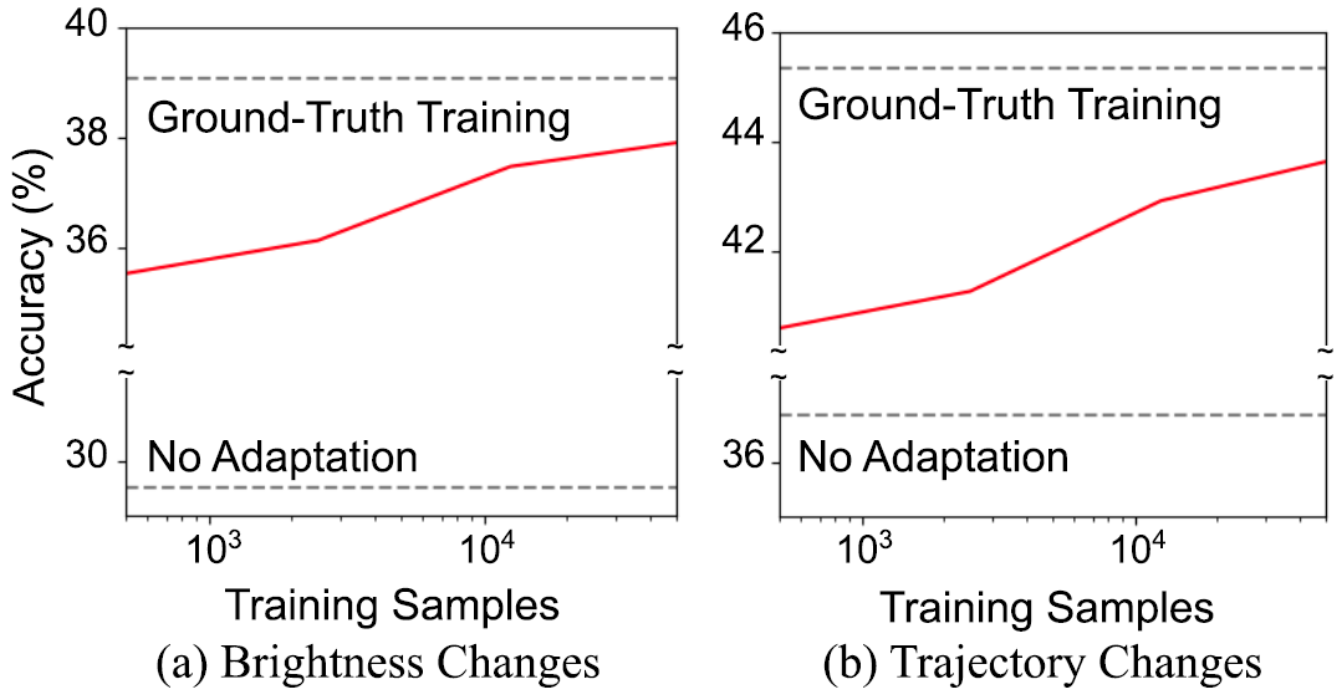

The curves above show the classification accuracy of Ev-TTA on N-ImageNet, a large-scale benchmark for event-based object recognition. The dataset contains evaluation splits with events recorded under brightness changes (e.g., low light) and camera trajectory changes (e.g., fast motion). Compared to no adaptation, Ev-TTA yields large accuracy improvements, and its accuracy approaches that of training with ground-truth labels as more test-time training data becomes available.

@InProceedings{Kim_2022_CVPR,
  author = {Kim, Junho and Hwang, Inwoo and Kim, Young Min},
  title = {Ev-TTA: Test-Time Adaptation for Event-Based Object Recognition},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  month = {June},
  year = {2022},
  pages = {17745-17754}
}